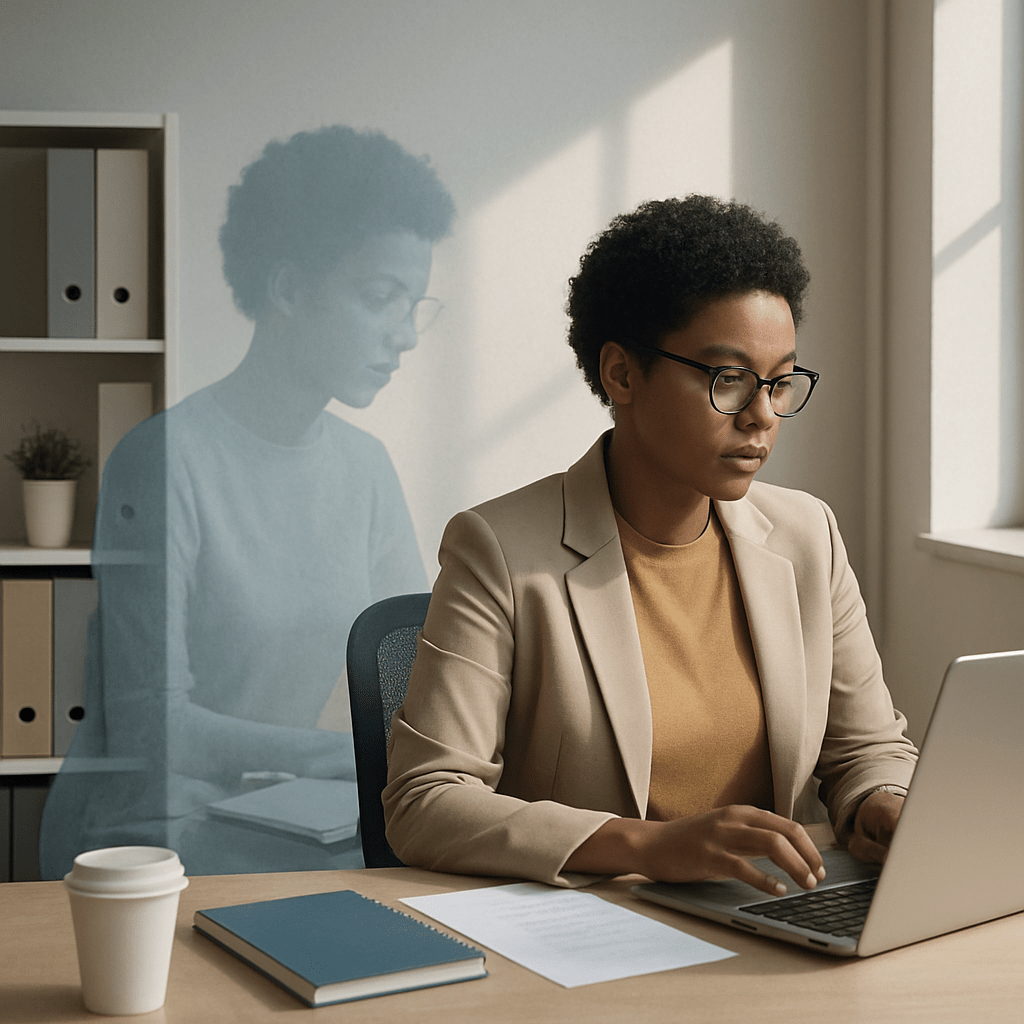

Last month I spoke with a mid-career professional who quietly confided that they’d been relying on a generative AI tool to draft client reports. They kept it to themselves, not out of malice, but because they feared judgment. “If my manager knew,” they told me, “I’d look lazy, or worse, incompetent.” This is the new and growing phenomenon of “AI shame.”

The hesitation to acknowledge AI use has ripple effects. First, it forces individuals to shoulder risks in isolation, including intellectual property exposure, compliance issues, and simple factual errors. Second, it erodes trust: team members suspect “shadow AI” but rarely speak about it openly. When organizational silence surrounds powerful technology, both innovation and accountability suffer.

Shadow AI in Practice

Despite the lack of comprehensive policies to guide workers, generative AI tools are already embedded in many individual daily workflows without formal approval. Employees quietly turn to chat-based assistants for emails and reports, use AI content generators for presentations, and rely on code-completion tools for programming tasks. A recent industry survey by the audit and consulting company Deloitte found that more than 60% of knowledge workers admitted to using AI without disclosing it to managers or compliance teams. Anecdotes abound: consultants drafting client deliverables through AI, analysts reworking financial summaries, and healthcare administrators experimenting with draft patient communications.

At one level, this reflects ingenuity: professionals looking for efficiency or new solutions. At another level, it fuels unease. When people believe they must hide their work tools, they create silos of secret practice. Managers begin to worry whether output is compliant. Trust wanes, not only between supervisors and employees, but also within teams who suspect unequal advantages. Employees, for their part, carry anxiety, knowing their undisclosed AI usage could be viewed as rule-breaking if revealed. That cumulative tension is corrosive for culture and for outcomes.

The Legal Conundrum

Perhaps the most uncomfortable reality is this: AI cannot be held liable for harmful outcomes. Algorithms, however powerful, are not recognized as legal entities and have no legal personality. They cannot owe a duty of care, nor bear responsibility for professional negligence, misrepresentation, or breach of contract. That responsibility falls squarely on human actors: employees and, ultimately, their employers.

This asymmetry creates a profound governance challenge. If an AI-generated contract clause results in litigation, the organization, not the tool, is accountable. If an AI-generated financial analysis contains errors, regulators will hold executives responsible, not the model provider. In healthcare, a misapplied AI summary could pose patient risks, with liability attaching to practitioners and institutions. For professional services industries built on trust (law, finance, engineering, healthcare), the reputational cost of AI misuse may exceed the legal one. Clients expect accuracy, accountability, and assurance. The presence of “shadow AI” undermines all three.

What complicates matters further is intent versus negligence. An employee may not intend harm, but their secrecy creates organizational exposure. Without explicit policy, responsibility defaults upward: leaders carry the liability for errors they did not even know were happening. This mismatch between technical reliance and legal accountability is unsustainable.

Regulators are now moving quickly to address these risks. The EU AI Act, which entered into force in August 2024 and whose first obligations began to apply in 2025, is the world’s first comprehensive law governing AI use. It bans certain practices deemed to pose unacceptable risk (like behavioral manipulation and social scoring), imposes documentation and oversight requirements on general-purpose models, and places obligations on the organizations that build and deploy these systems rather than on the technology itself. High-risk AI systems (including certain systems used in legal, healthcare, and financial services) must pass a conformity assessment before going to market and remain subject to ongoing monitoring, and affected individuals have the right to file complaints with national authorities.

In the United States, the SEC has launched an AI Task Force, signaling stricter surveillance and enforcement in markets. The agency is leveraging AI for compliance and investigations, and expects organizations to do the same for internal controls. State-level laws (like Colorado’s CPIAIS) require “reasonable care” to avoid discrimination in algorithmic decisions, including hiring and promotion, with mandatory reporting of issues and annual impact assessments.

For now, responsibility stays with the humans who design, deploy, or oversee AI. If a system fails or causes harm, courts and regulators will hold humans accountable, not the algorithm.

Governance Imperatives

If organizations are to harness AI responsibly, they must move beyond denial and institute frameworks of governance. This involves more than IT controls: it requires explicit policies, cultural openness, and aligned accountability structures.

First, policies should delineate approved tools, usage scenarios, and guardrails around data security and intellectual property. Being clear about what is permissible reduces the temptation to operate in the shadows. Second, organizations should promote transparency through AI literacy programs embedded into onboarding, training, and continuing professional development. AI fluency initiatives can help demystify these tools, minimize stigma, and empower employees to disclose usage.

Culturally, the shift must be toward normalizing responsible AI rather than vilifying it. That means managers asking how team members achieved results, not just inspecting outputs. It means celebrating thoughtful AI application as a skill rather than treating it as a shortcut. Compliance protocols, audit trails, and mandatory disclosures create a safety net. By making the invisible visible, organizations recapture trust and remain compliant with new legal obligations.

Strategic Recommendations

How, then, can executives turn risk into resilience? A practical checklist:

- Policy: Define approved AI tools and explicit use cases.

- Training: Build ethical AI literacy so employees know both benefits and limits.

- Monitoring: Establish disclosure practices, audit trails, and compliance checks.

- Culture: Reduce AI shame by making usage transparent, encouraged, and celebrated when responsible.

By introducing these elements, organizations can minimize risk and unlock performance. A responsible adoption framework protects reputation and limits liability, while also accelerating innovation and productivity. The very practices that keep organizations safe also make them stronger.
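For teams that want to make the policy and monitoring items concrete, the following is a minimal sketch in Python of an approved-tools list combined with a disclosure log. Everything in it is an assumption made for illustration: the tool names, the use-case labels, the `Disclosure` fields, and the `record_disclosure` helper are hypothetical and do not describe any particular product or regulatory requirement.

```python
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone

# Hypothetical policy: approved tool -> use cases it is approved for.
APPROVED_TOOLS = {
    "chat-assistant": {"email_draft", "report_draft"},
    "code-completion": {"programming"},
}


@dataclass
class Disclosure:
    """One employee-submitted record of AI use (illustrative fields only)."""
    employee: str
    tool: str
    use_case: str
    human_reviewed: bool  # did a person check the output before it was used?
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def is_approved(tool: str, use_case: str) -> bool:
    """Return True if the policy approves this tool for this use case."""
    return use_case in APPROVED_TOOLS.get(tool, set())


def record_disclosure(log: list, disclosure: Disclosure) -> dict:
    """Append the disclosure to the audit log and flag it for follow-up if needed."""
    entry = asdict(disclosure)
    entry["approved"] = is_approved(disclosure.tool, disclosure.use_case)
    # Flag for a conversation, not a penalty: unapproved use or unreviewed output.
    entry["needs_follow_up"] = (not entry["approved"]) or (not disclosure.human_reviewed)
    log.append(entry)
    return entry


audit_log = []
ok_entry = record_disclosure(
    audit_log, Disclosure("a.analyst", "chat-assistant", "report_draft", True)
)
flagged_entry = record_disclosure(
    audit_log, Disclosure("b.consultant", "image-generator", "presentation", True)
)
```

The design choice worth noting is that an unapproved or unreviewed use is flagged for follow-up rather than rejected. In keeping with the cultural point above, the log is meant to make usage visible, not to punish disclosure.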

Closing Reflection

AI is here to stay. Its potential is extraordinary, but its accountability is not transferable. We cannot outsource responsibility to an algorithm, no matter how advanced. The organizations that will excel in this new era are those that encourage transparency, equip employees for responsible use, and reaffirm that accountability ultimately rests with people. The future of AI at work isn’t simply about what machines can do. It’s about how humans choose to govern and own the outcomes.